TL;DR: hit each of GCP's 29 regions once per minute and send batched inputs to save time and work around rate limits.

Gemini 1.5 Flash is great: fast, effective and accurate. What isn't great is its default rate limit of 1 request per minute per region.

Now imagine you have more than 40,000 articles and need 42 API requests for each one. How long would that take? Well, 40,000 × 42 = 1,680,000 requests, which at one request per minute is 1,680,000 minutes, or roughly 3 years and 2 months.
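To double-check that estimate, here is a quick back-of-the-envelope calculation. The article and request counts come from the numbers above; the one-request-per-minute figure assumes a single region at the default limit.

```python
# Baseline: one region, one request per minute, one article per request.
ARTICLES = 40_000            # ">40,000 articles" from the post
REQUESTS_PER_ARTICLE = 42    # from the post
REQUESTS_PER_MINUTE = 1      # assumed default limit for a single region

total_requests = ARTICLES * REQUESTS_PER_ARTICLE
total_minutes = total_requests // REQUESTS_PER_MINUTE
total_days = total_minutes / (60 * 24)
total_years = total_days / 365

print(f"{total_minutes:,} minutes")
print(f"about {total_days:,.0f} days, or {total_years:.1f} years")
```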

Raising this rate limit requires a sales team to approve your request, and a student working off credits doesn't score that too easily.

What's the fix?

- Region switching: A cool bypass that instigated the writing of this blog

- We have rate limits per region, but one key thing I had overlooked is that Google Cloud Platform gives you 29 regions to choose from.

- So, if you're hitting the rate limit in one region, switch to another. This is a great way to get around the rate limits and get your data faster.

- Batch processing: Gemini follows instructions better than every model I've used, including the Pro version from the same family.

- To use this to my advantage, I decided to send 60 articles per request (more for sub-nodes, but that's for another blog).

- I simply prompted Gemini to answer in JSON and provided each article's database identifier to the model.
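The batching idea can be sketched in plain Python. This is a minimal illustration, not the actual pipeline: the prompt wording, the `id`/`text`/`result` field names, and the helper names `chunk`, `build_prompt` and `parse_response` are all assumptions made for the example, and the model call itself is left out.

```python
import json

BATCH_SIZE = 60  # articles per request, from the post


def chunk(items: list, size: int = BATCH_SIZE):
    """Yield consecutive slices of at most `size` items."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def build_prompt(batch: list[dict]) -> str:
    """Build one prompt for a whole batch (hypothetical wording).

    Each article carries its database id so the answer can be matched back.
    """
    payload = [{"id": a["id"], "text": a["text"]} for a in batch]
    return (
        "For each article below, answer in JSON only: return an array of "
        'objects with the keys "id" and "result". Copy each id exactly.\n'
        + json.dumps(payload)
    )


def parse_response(raw: str, batch: list[dict]) -> dict:
    """Map results back to database ids, dropping ids that were not sent."""
    expected = {a["id"] for a in batch}
    return {
        row["id"]: row["result"]
        for row in json.loads(raw)
        if row["id"] in expected
    }
```

Filtering on the ids that were actually sent is a cheap guard against the model inventing or mangling an identifier; a real pipeline would probably also want to retry any ids missing from the response.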

Putting it all together

How It Works

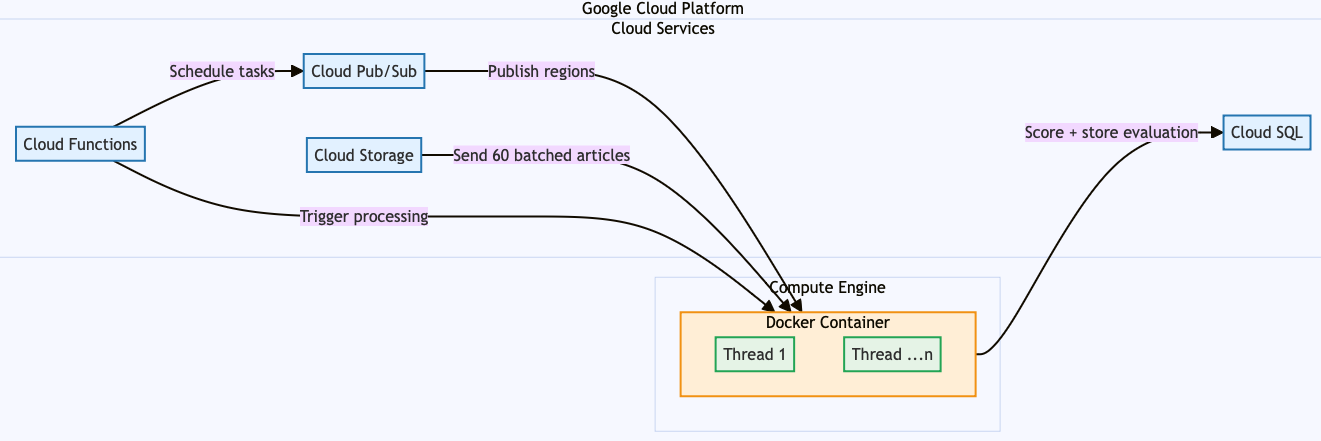

- Cloud Functions sets the wheels in motion, sending messages to Cloud Pub/Sub, specifying which region should process the batch.

- Cloud Pub/Sub sends these region-specific messages to Docker containers running on Compute Engine.

- Cloud Storage delivers batches of 60 articles for each prompt to the appropriate Docker containers.

- Docker containers process the articles and send the results to Cloud SQL for evaluation and storage.

- Cloud Functions schedules and triggers the next batch of tasks to repeat the cycle.
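The region-specific messages from the first step could be planned along these lines. This is a sketch under stated assumptions: the message fields (`batch_id`, `region`, `minute_slot`) and the `plan_messages` helper are hypothetical, the region list is a three-entry sample rather than the full 29, and actually publishing to Pub/Sub is left out.

```python
# Sample only: three GCP region names standing in for the full list of 29.
SAMPLE_REGIONS = ["us-central1", "europe-west1", "asia-east1"]


def plan_messages(batch_ids: list[str], regions: list[str] = SAMPLE_REGIONS) -> list[dict]:
    """Assign batches to regions round-robin.

    `minute_slot` is the minute in which the batch may run, so no region
    receives more than one request per minute.
    """
    messages = []
    for index, batch_id in enumerate(batch_ids):
        messages.append({
            "batch_id": batch_id,
            "region": regions[index % len(regions)],
            "minute_slot": index // len(regions),
        })
    return messages


if __name__ == "__main__":
    for message in plan_messages([f"batch-{i}" for i in range(7)]):
        print(message)
```

With the full region list, every minute slot would hold 29 messages, one per region, which is where the 29 requests per minute come from.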

The Game-Changing Results

Here’s where things get exciting. With this setup:

- Requests per minute: 29 (one per region)

- Articles per request: 60

- Articles processed per minute: 1,740

- Articles processed per hour: 104,400

- Articles processed per day: 2,505,600

- Articles processed in 3 years and 2 months: 2,923,200,000
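The figures above follow from two numbers, 29 regions and 60 articles per request. Here is a small script to reproduce them, assuming every region sustains exactly one request per minute with no failures or retries:

```python
REGION_COUNT = 29            # one request per minute per region
ARTICLES_PER_REQUEST = 60
BASELINE_MINUTES = 1_680_000  # the "3 years and 2 months" from earlier

per_minute = REGION_COUNT * ARTICLES_PER_REQUEST
per_hour = per_minute * 60
per_day = per_hour * 24
in_baseline_time = per_minute * BASELINE_MINUTES

print(f"per minute: {per_minute:,}")
print(f"per hour:   {per_hour:,}")
print(f"per day:    {per_day:,}")
print(f"in 3 years and 2 months: {in_baseline_time:,}")
```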

That’s a whopping 1,740x increase in processing speed (29 regions × 60 articles per request) over sending one article per request to a single region. And the ironic part? It’s all thanks to Google Cloud solving an issue I ran into with Google Cloud.